Get Coverage Reports From a Flask Application Running Inside a Docker Container

Published on Jul 17, 2022 by Diego Rodriguez Mancini.

Introduction

Sometimes, when you write acceptance tests or behavior tests for the public-facing side of a library or application, you need to run them against a built instance of your app. These tests usually simulate what an end user would do, so they are expected to run against a “real” instance of the running product.

This type of testing can be accompanied by a coverage report. Coverage tools analyze which parts of the code get executed when you run your tests and create a report at the end of the test run. But how do you get coverage reports for a long-running process like a Flask app?

By using the coverage package’s programmatic API, we can start measuring coverage when the Flask app starts and then produce the report when it stops. In this post I show the key elements needed to run the Flask app with coverage enabled and save the reports on process exit, after all tests have finished.

Create the application with coverage

The app initialization is simple: we use an app extension that starts coverage measurement and then saves the results to a defined location at exit.

    import os

    from flask import Flask

    from coverage_extension import Coverage

    app = Flask(__name__)

    # Only enable coverage when the test environment asks for it.
    if os.getenv("EXAMPLES_TESTING"):
        Coverage(app)

The Coverage class follows the regular Flask extension convention. We use atexit to register a handler that stops the measurement and calls coverage.save() when the process exits. Finally, the handler generates an XML report in a predefined location.

    import atexit


    class Coverage(object):
        def __init__(self, app=None):
            self.app = app
            if app is not None:
                self.init_app(app)

        def init_app(self, app):
            # Imported lazily so coverage is only required when the extension is used.
            import coverage

            # Keep a reference so save_coverage also works when init_app is called later.
            self.app = app
            app.logger.info("Using Coverage Extension")
            app.coverage = coverage.Coverage(
                data_file="/coverage-output/.coverage", source=["src/"]
            )
            app.coverage.start()
            # Write the data file and the XML report when the process exits.
            atexit.register(self.save_coverage)

        def save_coverage(self, *args, **kwargs):
            self.app.logger.info("Saving Coverage Reports")
            self.app.coverage.stop()
            self.app.coverage.save()
            self.app.coverage.xml_report(outfile="/coverage-output/coverage.xml")

So as long as our application is running, coverage data is being collected, and every request is included in it. After starting the server we can start making requests from our tests and then, when we are ready, stop the app. On exit, the data file is written to /coverage-output/.coverage and the XML report to /coverage-output/coverage.xml.
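
Once the XML report exists, a CI step can read it with the standard library alone. The following is a minimal sketch, assuming the report follows the Cobertura-style layout that coverage’s XML output uses (a root coverage element with a line-rate attribute, and one class element per source file). The summarize_coverage helper and the sample report are hypothetical and only illustrate the structure.

```python
import xml.etree.ElementTree as ET


def summarize_coverage(xml_text):
    """Return (overall_line_rate, {filename: line_rate}) from a Cobertura-style report."""
    root = ET.fromstring(xml_text)
    overall = float(root.get("line-rate", 0.0))
    per_file = {
        cls.get("filename"): float(cls.get("line-rate", 0.0))
        for cls in root.iter("class")
    }
    return overall, per_file


# Hypothetical, trimmed-down report used only to illustrate the structure.
SAMPLE_REPORT = """<?xml version="1.0" ?>
<coverage line-rate="0.75" branch-rate="0">
  <packages>
    <package name="src">
      <classes>
        <class name="app.py" filename="src/app.py" line-rate="1"/>
        <class name="views.py" filename="src/views.py" line-rate="0.5"/>
      </classes>
    </package>
  </packages>
</coverage>
"""

if __name__ == "__main__":
    overall, per_file = summarize_coverage(SAMPLE_REPORT)
    print(f"overall: {overall:.0%}")
    for filename, rate in sorted(per_file.items()):
        print(f"{filename}: {rate:.0%}")
```

In a real pipeline you would pass the contents of /coverage-output/coverage.xml instead of the sample string, for example to fail the build below a chosen threshold.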

Remember that the location you choose needs to be mounted as a volume later, when you run the app inside a container; otherwise the reports are lost when the container is removed.
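
A missing or read-only mount would only surface at exit, when the report fails to save. One way to catch it earlier is a startup check like the sketch below. The ensure_writable_dir helper is hypothetical and not part of the extension shown above; it assumes the output directory is expected to exist before the app starts.

```python
import os
import tempfile


def ensure_writable_dir(path):
    """Return `path` if it is an existing, writable directory; raise RuntimeError otherwise."""
    if not os.path.isdir(path):
        raise RuntimeError(
            f"Coverage output directory {path!r} does not exist; is the volume mounted?"
        )
    try:
        # Create and immediately delete a temporary file to prove we can write.
        with tempfile.NamedTemporaryFile(dir=path):
            pass
    except OSError as exc:
        raise RuntimeError(
            f"Coverage output directory {path!r} is not writable"
        ) from exc
    return path


if __name__ == "__main__":
    # The system temp directory stands in for the mounted /coverage-output volume.
    print(ensure_writable_dir(tempfile.gettempdir()))
```

Calling such a check at the top of init_app, before coverage starts, would make a misconfigured container fail fast instead of silently losing the report.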

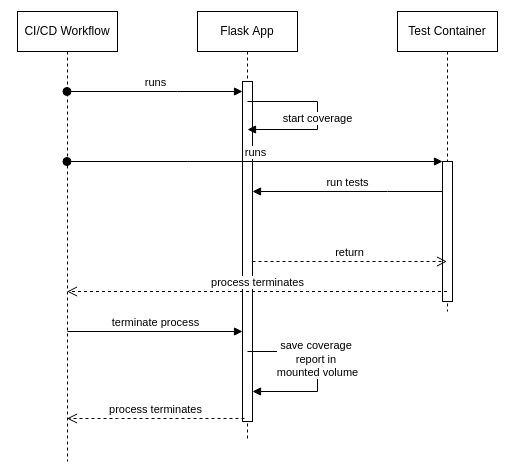

The CI/CD process

For this particular example I ran the tests from a separate container, with the application running in a container built exactly like a production build, but with coverage reporting activated (coverage doesn’t run on the production build, for obvious reasons).

I’m not going to go into the details of the containers or the workflow code. The important thing here is that we need to stop the app process cleanly, and only once we are sure that all tests have finished. In my case I run a killall gunicorn command from within the Flask app container. killall sends SIGTERM by default, which gunicorn handles as a graceful shutdown, so the atexit handler we registered before gets triggered. Since gunicorn is the container’s entrypoint, stopping it stops the container as well.
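
Why a clean stop matters can be seen with plain Python, without gunicorn. The sketch below assumes a POSIX system and uses a hypothetical child script. It starts a child process that registers an atexit hook, sends it SIGTERM, and captures what the child printed. The hook only runs when the child handles the signal and exits normally, which is the role gunicorn’s own signal handling plays in the setup described above.

```python
import signal
import subprocess
import sys
import textwrap

# Hypothetical child script: registers an atexit hook, then waits to be terminated.
CHILD_TEMPLATE = textwrap.dedent(
    """
    import atexit, signal, sys, time

    atexit.register(lambda: print("saving coverage", flush=True))
    if {handle_sigterm}:
        # Turn SIGTERM into a normal interpreter exit so atexit hooks run.
        signal.signal(signal.SIGTERM, lambda signum, frame: sys.exit(0))
    print("ready", flush=True)
    time.sleep(30)
    """
)


def run_and_terminate(handle_sigterm):
    """Start a child, send it SIGTERM, and return what it printed after 'ready'."""
    code = CHILD_TEMPLATE.format(handle_sigterm=handle_sigterm)
    with subprocess.Popen(
        [sys.executable, "-c", code], stdout=subprocess.PIPE, text=True
    ) as child:
        child.stdout.readline()  # Wait for "ready" so the handlers are installed.
        child.send_signal(signal.SIGTERM)
        output = child.stdout.read()
        child.wait()
    return output


if __name__ == "__main__":
    print("with handler:   ", run_and_terminate(True).strip() or "<nothing>")
    print("without handler:", run_and_terminate(False).strip() or "<nothing>")
```

The same reasoning applies to SIGKILL (for example killall -9), which cannot be handled at all, so the report would never be written.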

Conclusion

By using the coverage package’s programmatic API, we can produce coverage reports for a long-running process like a Flask application. We start measuring coverage when we initialize our Flask app, and from then on every line executed is recorded in the final report. This is useful for several types of tests, such as acceptance tests and functional tests. I think a very interesting use case could be usability testing, where you deploy a version of your app that measures coverage from real user usage, or even use it for telemetry, since you can analyze which modules do or don’t get accessed by your users.